ChatGPT, what isn’t it good for?

There’s a weird little revolution going on in AI right now. Contrary to the clickbait floating around on Twitter and the internet at large, it’s not coming for your head.

In November 2022, a company called OpenAI released the AI-based chatbot “ChatGPT” into the world. ChatGPT is built on a model from OpenAI’s GPT-3.5 series, a refinement of its GPT-3 (“Generative Pre-trained Transformer 3”) language model. But before we get into all that, we need to go back to basics.

Let’s start with AI itself. AI is all about getting a computer to do things we ordinarily associate with people. For instance, have you ever used Optical Character Recognition (“OCR”), say, to convert a scanned PDF into a Word document? That’s AI. Have you ever used a computer model to analyze weather data to predict the best time to plant your soybean crop? No? Okay. Well, that’s AI too.

But where AI has gotten particularly impressive in recent years is an area known as natural language processing, or “NLP.” To summarize this incredibly sophisticated and informationally dense field in a sentence: researchers are teaching computers to talk. Well, “talk.” Whether the medium is voice or text, the goal of NLP is to build machine systems that can understand and respond to spoken or written language much as a human would. It’s intense stuff.

So, enter OpenAI. OpenAI isn’t your typical company, even by Bay Area / Silicon Valley standards. It was founded in 2015 (relatively new); it counts Elon Musk, Peter Thiel, and Reid Hoffman among its founding backers (supergroup?); and it’s not out simply to make as much money as possible for shareholders (interesting, if true). There are two sides to it: a non-profit research lab and a for-profit limited partnership. Finally, there’s a mission: “to ensure that artificial general intelligence benefits all of humanity.” Okay.

So, now we get to GPT-3, the model family that underpins ChatGPT. Released in 2020, GPT-3 represents the cutting edge of text-generating AI. It’s so cutting-edge, in fact, that Microsoft acquired exclusive rights to use the underlying model shortly after its release. Sorry, what? Where’d the “Open” in OpenAI go? As you might expect, this pissed some people off.

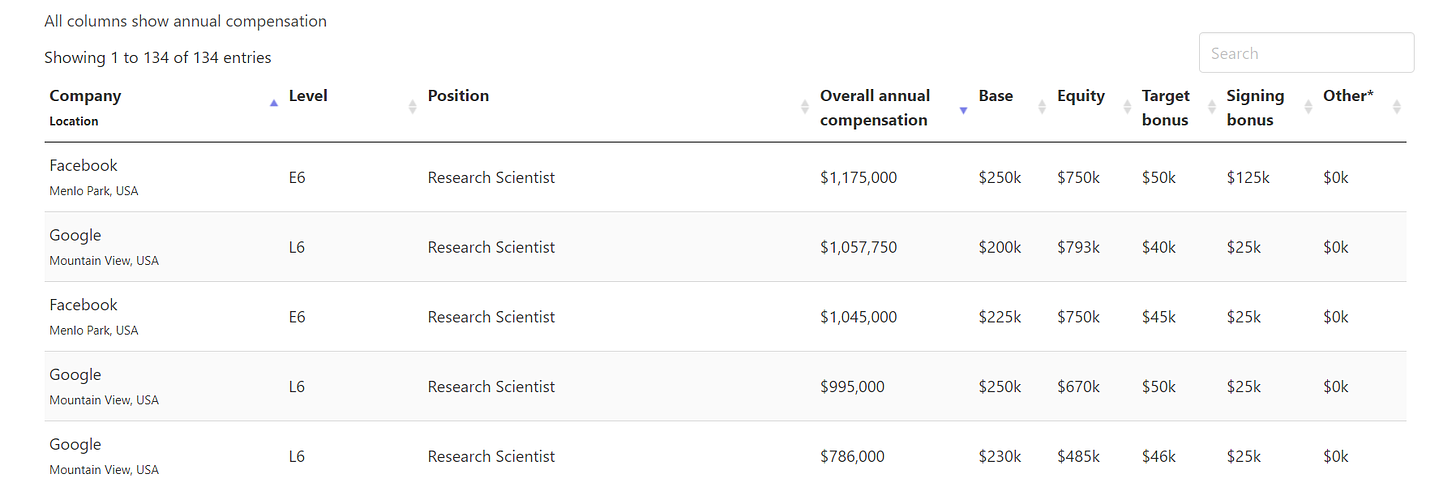

But, of course, tech development costs money. And it’s not just virtual machines / server time, either. In AI, it’s ironically the human capital that’s eye-poppingly expensive. Don’t believe me? Take a look at this:

(Parents, take note. It’s not just “lawyer” and “doctor” anymore. But I digress…)

And while OpenAI might not be competing in pay with the likes of Facebook / Meta or Google, it’s definitely paying its people well, as The New York Times reported back in 2018. It’s the whole “spend money to make money” bit, except here it’s more “spend money to…do good?” And all that money has to come from somewhere.

Mind you, OpenAI has stated publicly that its deal with Microsoft won’t affect public users’ access to GPT-3 through OpenAI’s API, in keeping with the whole “open” thing. But that just raises another question: what, then, did Microsoft actually license? As you might imagine, there isn’t exactly a ton of public information about the terms of the deal itself. But Microsoft seems more than happy with its investment; years later, it has poured in a few billion more. As for where this gets applied in its suite of services? For starters, generating app and software code from natural-language conversation, i.e., the potential to simply “speak” an app into existence. And that’s just…starters.

But okay, we’ve spent a bunch of time talking about OpenAI, GPT-3, and the economics of AI development; now, can we get to the show? It’s time to talk about ChatGPT.

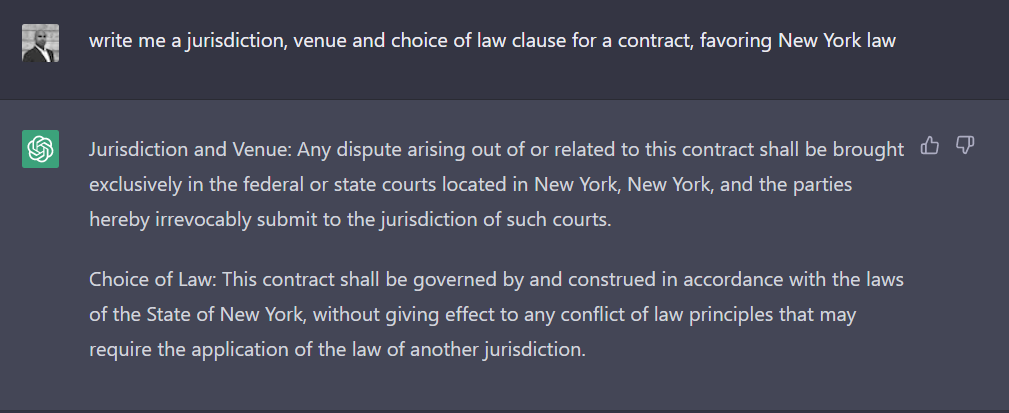

ChatGPT is exactly what it sounds like: a portal for folks like you and me to “talk to” OpenAI’s GPT model. One might ask it to explain the significance of the ducks in The Catcher in the Rye. Or ask how much oil goes into lubricating wind turbines. Or have it draft the choice of law clause in a contract. The list of use cases is endless.

So, why did I pick the ducks in The Catcher in the Rye, of all things? Think back to high school English. The novel’s still a staple in most curricula (I hope?). And the ducks are a fairly common essay or short answer topic. Are you thinking what I’m thinking? Because yes, a high schooler could definitely use ChatGPT to cheat. So, how to prevent this? More AI, obviously. Like everything else in this world, it’s all just a game of cat and mouse.

But what about in a professional context? Like that choice of law clause? As someone who drafts those with perhaps mind-numbing regularity, I thought I’d give ChatGPT a chance at taking my job.

Honestly, not a bad attempt at all. Does it catch everything? No. (Forum non conveniens, anyone?) But the issue here isn’t whether ChatGPT did a fair or poor job; it’s that it doesn’t know what it doesn’t know. I knew something it was missing. I re-prompted, and at first got an answer that preserved forum non conveniens as a defense. I re-prompted again, and ChatGPT eventually got there, but with some pretty jacked up phrasing. (“Jacked up” here being a term of art within the legal profession.)

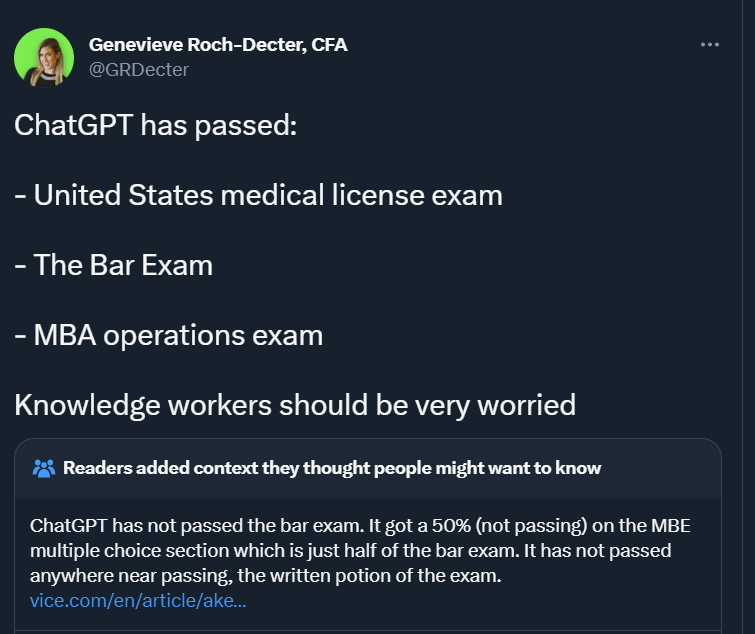

So, where does this leave us? Well, hmm. Am I worried about ChatGPT (or any other AI service, really) making lawyers unnecessary anytime soon? Molon labe, computer. The answer is no, not even a little bit, though bad takes on Twitter and in “news” headlines would have me believe otherwise. But am I impressed with the tech? You bet.

At the end of the day, ChatGPT can be a tool for professionals, but it can’t quite be those professionals. The goal here, at least for someone like me, is to figure out how to use it to save time and deliver services more efficiently. That’s where the value today is. Would I trust ChatGPT to do legal research for me? Not a chance. Respond to a client e-mail? Nope. But could I make use of a GPT-based extension in Word to help make formatting changes to a brief? 100%.

So, my point: don’t believe the nonsense headlines about how “ChatGPT is going to replace you with AI.” It fully isn’t, because it fully cannot. The problem with the prevailing “discourse” (if one can even call that sort of click-baity nonsense “discourse”), however, is that it backs a lot of folks into a corner of ignorance, because people often ignore things that make them uncomfortable. It’s too easy to go from “AI can’t possibly replace me” (true) to “AI isn’t worth thinking about because it’s irrelevant to me” (false), and the latter is asymmetrically perilous. In the long run, the people who profit most will be those who adopt AI readily, inter alia as a means of building greater business efficiency. It’s the gatekeeping-by-fear that’s the real problem here. Don’t fall into the trap.